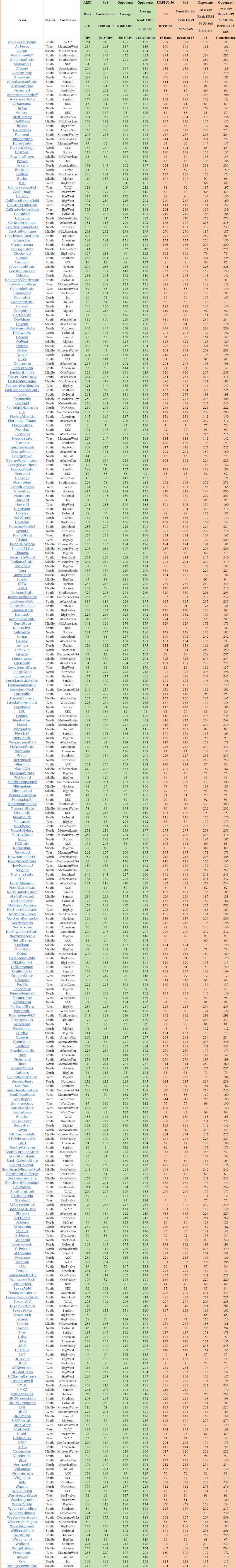

[NOTE: This report is based on the NCAA Tournament seed and at large selection process as currently mandated by the NCAA. Under the current process, the Women's Soccer Committee can use only two rating systems when making at large selections: the NCAA RPI and the KP Index (KPI). It cannot consider any other rating or ranking system. Further, as between the RPI and the KPI, the RPI must be the primary system the Committee uses. It can use the KPI only if use of the RPI and other primary criteria does not result in a decision. This report will mention two other rating systems, the Balanced RPI and the Massey ratings. These mentions are for informational purposes only. If the Committee were to use either one of those systems as a primary rating system, the pool of teams it would be considering for at large positions would be significantly different. It is highly likely this would result in teams not considered by the Committee receiving at large positions, with other teams being dropped from at large slots. It also might affect seeding.]

Over the years, the Women's Soccer Committee has been relatively consistent in the patterns it follows when making NCAA Tournament seed and at large selection decisions. This report discusses Committee decisions this year that did not exactly match the historic patterns.

South Alabama Not Getting an At Large Position

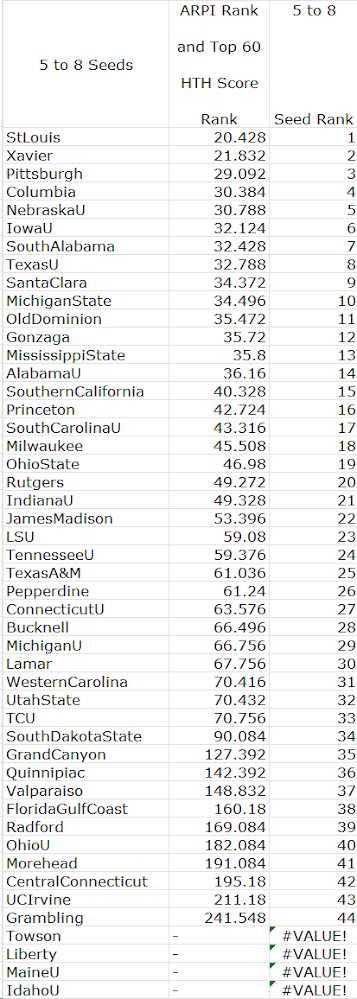

The only major change I see from historic decision patterns is the Committee not giving an at large position to RPI #27 South Alabama.

Since 2007, teams with RPI ranks of #30 or better always have gotten at large positions. Moving the bar by three positions is a big change.

#55 Colorado got an at large position. Thus the Committee concluded that in this area of the rankings, the RPI this year was off by 29 positions. Further, in the past a team ranked as poorly as #57 has gotten an at large position, suggesting the RPI can be off by as many as 31 positions.

I use 118 metrics to evaluate teams, all based on the factors the NCAA requires the Committee to use in making at large selections. Each metric has two standards. One is a "yes" standard: if a team met it in the past, the team always got an at large position. The other is a "no" standard: if a team met it in the past, the team never got an at large position. South Alabama met 11 "yes" standards and no "no" standards, thus looking like a sure thing for an at large position. Ten of the "yes" standards, however, included use of South Alabama's RPI rating or its RPI rank. Only one did not: South Alabama's rank in terms of Head to Head Results Against Top 60 Opponents paired with its rank in terms of Poor Results, each weighted at 50%.
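The "yes" and "no" standards can be pictured as a pair of thresholds per metric. The following is only a minimal sketch of that idea, not the actual system behind this report: the class and function names are hypothetical, it handles rank-style metrics only (lower is better), and the single example metric uses RPI rank thresholds inferred from this report (#30 or better always selected, and #57 the poorest rank ever selected, so #58 or poorer never selected).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RankMetric:
    """One rank-style metric with its two historic standards (lower rank = better)."""
    name: str
    yes_at_or_better: int  # every past team ranked here or better was selected
    no_at_or_worse: int    # no past team ranked here or worse was selected


def count_standards(ranks: dict[str, int], metrics: list[RankMetric]) -> tuple[int, int]:
    """Return (number of "yes" standards met, number of "no" standards met)."""
    yes = sum(1 for m in metrics if ranks[m.name] <= m.yes_at_or_better)
    no = sum(1 for m in metrics if ranks[m.name] >= m.no_at_or_worse)
    return yes, no


def describe(yes: int, no: int) -> str:
    """Translate the two counts into what past Committee decisions would imply."""
    if yes > 0 and no > 0:
        return "profile not seen before"
    if yes > 0:
        return "historically always selected"
    if no > 0:
        return "historically never selected"
    return "not decided by the standards"


# Illustrative metric only; the thresholds are inferred from this report.
RPI_RANK = RankMetric(name="rpi_rank", yes_at_or_better=30, no_at_or_worse=58)

south_alabama = count_standards({"rpi_rank": 27}, [RPI_RANK])
print(south_alabama, describe(*south_alabama))
```

With this one illustrative metric, a team ranked #27 meets the "yes" standard and a team ranked #55 meets neither standard. A fuller version would hold all 118 metrics, including paired and weighted ones, which this sketch does not attempt.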

In not giving South Alabama an at large position, the Committee effectively said it did not believe South Alabama's RPI rating and rank.

Note: The RPI ranked South Alabama #27. The KPI ranked it #30. Given this small difference, it seems unlikely the KPI played a significant part in the Committee not giving South Alabama an at large position. The Balanced RPI ranked it #51 and Massey ranked it #70.5, both of which support the Committee decision.

TCU Not Getting an At Large Position

TCU met three "yes" standards for an at large position and no "no" standards. The "yes" standards all related to TCU's good results against Top 50 opponents.

The Committee deviated from the "yes" standards by not giving TCU an at large position. The deviations, however, were moderate to small rather than large, so I do not consider this a major change from the Committee's historic patterns.

When I compare TCU's and South Alabama's opponents' ranks and their results against Top 50 opponents, I easily can imagine the Committee thinking that they could not possibly deny TCU an at large position yet give one to South Alabama. This may have contributed to South Alabama not getting an at large position.

Note: The RPI ranked TCU #54, the KPI #50. The KPI thus was not a basis for the Committee decision. The Balanced RPI ranked TCU #37 and Massey #28, which suggests the Committee may have underrated it.

Providence Getting an At Large Position

Providence met no "yes" standards for an at large position and 1 "no" standard. The Committee deviation from the "no" standard was minimal.

Note: The RPI ranked Providence #42, the KPI #39. The KPI thus may have been a contributing factor for the Committee's decision. The Balanced RPI ranked Providence #50 and Massey #73.5, which suggests the Committee may have overrated it.

Colorado Getting an At Large Position

Colorado met no "yes" standards for an at large position and 1 "no" standard. The Committee deviation from the "no" standard was moderate.

Note: The RPI ranked Colorado #55, the KPI #40. The KPI thus may have been a contributing factor for the Committee's decision. The Balanced RPI ranked Colorado #24 and Massey #25, both of which support the Committee decision.

Tennessee Getting an At Large Position

Tennessee met 10 "yes" standards for an at large position and 2 "no" standards. (When a team meets one or more "yes" standards and one or more "no" standards, it means the team has a profile the Committee has not seen in the past.) The Committee deviations from the "no" standards were moderate to small.

Note: The RPI ranked Tennessee #32, the KPI #33. The KPI thus may have been a contributing factor for the Committee's decision. The Balanced RPI ranked Tennessee #42 and Massey #40, which suggests the Committee may have overrated it.

LSU Getting an At Large Position

LSU met 2 "yes" standards for an at large position and 4 "no" standards. The Committee deviations from the "no" standards were moderate to small.

Note: The RPI ranked LSU #52, the KPI #45. The KPI thus may have been a contributing factor for the Committee's decision. The Balanced RPI ranked LSU #59 and Massey #55, which suggests the Committee may have overrated it.

At Large Summary

South Alabama, with its #27 RPI rank, not getting an at large position was a major change from the Committee's historic pattern. The other Committee decisions represent moderate changes from the Committee's historic patterns, but are not unusual in relation to changes I have seen in prior years.

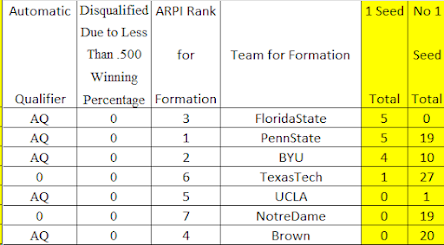

Clemson Getting a #1 Seed

Clemson met no "yes" standards for a #1 seed and 15 "no" standards. The "no" standards all related to its RPI rating or rank and/or its rating or rank in terms of its Head to Head Results Against Top 60 Opponents. The Head to Head Results factor evaluates its record in terms of wins, ties, and losses against other Top 60 opponents, without regard for where those opponents stand in the Top 60 rankings. Clemson's deviations from the standards ranged from big to small.

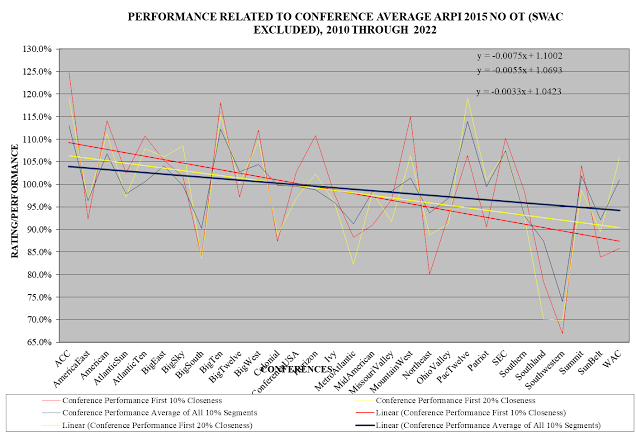

Starting in 2022, the NCAA eliminated overtimes in regular season games. As a result, the number of tie games has doubled. The increased number of tie games tends to pull teams' RPI ratings towards 0.500, which has a negative effect on the RPI ratings of strong teams. It also tends to reduce the calculated value of Head to Head Results Against Top 60 Opponents. An expected effect of this is that fewer teams will meet the historic seed and at large selection standards for these factors. If so, then as the Committee makes decisions over the next few years, I will need to recalibrate (relax) the standards to accommodate those decisions.
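To see why more ties pull ratings towards 0.500, consider just the winning percentage part of a rating. The sketch below is an illustration under stated assumptions, not the NCAA's full RPI computation: it assumes a tie counts as half a win, it ignores the strength of schedule parts of the RPI, and both teams and their records are hypothetical.

```python
def winning_pct(wins: int, losses: int, ties: int) -> float:
    """Winning percentage with a tie counted as half a win (an assumption)."""
    games = wins + losses + ties
    return (wins + 0.5 * ties) / games


# Hypothetical strong team with two games level after regulation.
strong_with_overtime = winning_pct(wins=16, losses=2, ties=2)     # wins both in overtime
strong_without_overtime = winning_pct(wins=14, losses=2, ties=4)  # both end as ties

# Hypothetical weak team with two games level after regulation.
weak_with_overtime = winning_pct(wins=2, losses=16, ties=2)       # loses both in overtime
weak_without_overtime = winning_pct(wins=2, losses=14, ties=4)    # both end as ties

print(strong_with_overtime, strong_without_overtime)
print(weak_with_overtime, weak_without_overtime)
```

In this made-up case the strong team drops from about 0.85 to 0.80 and the weak team rises from about 0.15 to 0.20, so both move towards 0.500. The size of the real effect depends on how often each team would have won its overtime games.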

When I initially looked this year at how most of the teams at the top of the rankings did in relation to the historic standards, I thought that the top group seemed weaker than has been the case historically. On further consideration it also is possible that they may appear weaker because of the effect of the increased number of ties and there not yet having been enough years under the no overtime rule to allow a full recalibration of the historic standards. If this is the case, then Clemson's apparently poor profile for a #1 seed may be partly a reflection of the effect of the no overtime rule, illustrating how the historic standards will have to change to accommodate the new rule. It also may be partly a reflection of weaker than usual candidates for a #1 seed.

Note: The RPI ranked Clemson #5, the KPI #9. The KPI thus did not support the Committee decision. The Balanced RPI ranked Clemson #6, Massey #4.

BYU Getting a #1 Seed

BYU met 2 "yes" standards for a #1 seed and 14 "no" standards, which means it had a profile the Committee has not seen in the past. The "no" standards mostly related to the Big Twelve's average RPI rating and/or rank. Two of the "no" standards related to BYU's Head to Head Results Against Top 60 Opponents (see Clemson). This year, the Big Twelve was the #7 rated conference, and BYU was second in the regular season standings to Texas Tech and runner up in the conference tournament to Texas. BYU's deviations from the standards ranged from big to small.

Only three teams met "yes" standards for a #1 seed: Florida State, BYU, and UCLA. It appears that the strong aspects of BYU's profile outweighed the negative aspects in the Committee's mind. Nevertheless, the Committee made a significant departure from its past decision patterns by giving a #1 seed to a team from the #7 conference that won neither the conference regular season nor conference tournament competition.

Note: The RPI ranked BYU #2, the KPI #3. The KPI may have been a contributing factor for the Committee decision. The Balanced RPI ranked BYU #5, Massey #5.

Penn State Getting a #2 Seed

Penn State met 3 "yes" standards for a #2 seed and 8 "no" standards. The Committee's deviations from the "no" standards ranged from big to small.

The "no" standards all related in whole or in part to Penn State's Top 50 Results, which measures the value of its good results (wins and ties) against Top 50 opponents, assigns values to those results on a sliding scale based on the opponents' ranks, and overall is designed to show at how high a level the team has shown it can compete. Penn State's best results were a tie with #13 North Carolina and a win over #17 Princeton.

With Penn State meeting both "yes" and "no" standards, it had a profile the Committee had not seen in the past. Its getting a #2 seed means that in the Committee's mind, its "yes" attributes outweighed its "no" attributes. Historically, which teams will be in the group that gets #1 through #4 seeds is quite predictable, as is which teams will get #1 seeds. Where teams in the #2 through #4 group will end up in the #2 through #4 distribution is less predictable. Thus Penn State getting a #2 seed involved a significant deviation from some of the Committee's past patterns, but is not a big surprise due to the unpredictable nature of seeding in the #2 through #4 range.

Note: The RPI ranked Penn State #4, the KPI #4. The KPI thus may have been a contributing factor for the Committee's decision. The Balanced RPI ranked Penn State #4, Massey #9.

North Carolina Not Getting a #2 Seed

North Carolina met 2 "yes" standards for a #2 seed and 1 "no" standard. The Committee's deviations from the "yes" standards were small to moderate. The "yes" standards related to North Carolina's Non-Conference RPI and its Top 50 Results. Its best results were a tie with #4 Penn State, a tie with #1 Florida State, and a tie with #12 Notre Dame.

With North Carolina meeting both "yes" and "no" standards and given the relative unpredictability of how the #2 through #4 seeds will be distributed, its not getting a #2 seed is not a big surprise.

Note: The RPI ranked North Carolina #13, the KPI #14. The KPI thus may have been a contributing factor for the Committee's decision. The Balanced RPI ranked North Carolina #8, Massey #6.

Memphis Not Getting a #4 Seed

Memphis met 2 "yes" standards for a #4 seed and 12 "no" standards, thus presenting a profile the Committee had not seen before. Its "yes" standards related to its Top 60 Head to Head Results combined with its lack of Poor Results. The Committee deviations from the "yes" standards were big. The Committee apparently concluded the negative aspects of its profile outweighed its positive aspects in relation to a #4 seed. I do not consider this surprising.

Note: The RPI ranked Memphis #14, the KPI #11. The KPI thus was not a factor for the Committee's decision. The Balanced RPI ranked Memphis #15, Massey #8.

Seed Summary

Given the context of the no overtime rule being in only its second year and the possibility that most of the candidate group for #1 seeds may have been weak by historic standards, the Committee's seeds this year do not appear to involve surprising departures from its historic patterns.

Overall Summary

The Committee's not giving an at large position to the #27 RPI ranked team was a significant departure from the Committee's historic patterns and suggests significant Committee discomfort with the RPI. Other than that, the Committee's decisions were about as expected, taking into consideration the NCAA's 2022 adoption of the no overtime rule and a possibly weaker than usual group competing for #1 seeds.