Now that we know the Women's Soccer Committee's at large selections, we can see who benefited from and who got hurt by the current NCAA RPI's defects. The following table is similar to the tables I provided in earlier posts, except that it compares the Committee's actual selections to the selections it likely would have made if it had used the Balanced RPI. As discussed in the preceding reports, the Balanced RPI does not have the current NCAA RPI's defects.

The following table shows who benefited and who got hurt; I explain it below. First is the Key for the table, then the table itself.

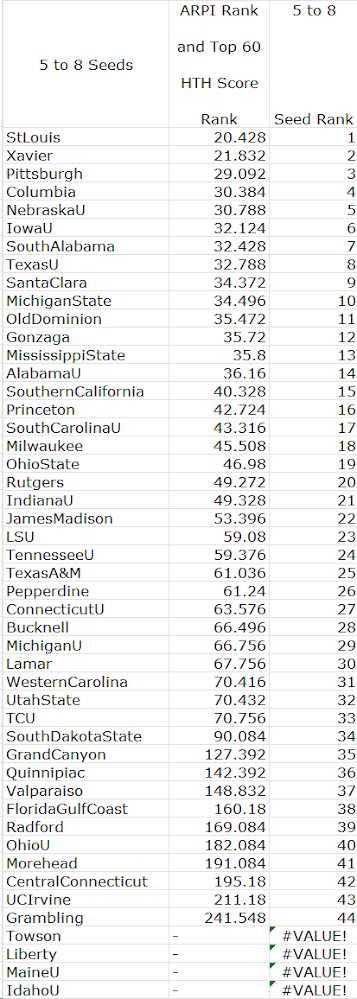

Candidates To Be Considered for At Large Positions

In the table, the first critical groups are the teams highlighted in green and red. Historically, all of the Committee's at large selections have come from teams ranked #57 or better. The green highlighting marks eight teams that are in the Top 57 under the Balanced RPI but not under the current NCAA RPI. These teams are hurt by the current NCAA RPI's defects because they are, effectively, excluded from the candidate group for at large selections. The red highlighting marks eight teams that are in the Top 57 under the current NCAA RPI but not under the Balanced RPI. These teams benefit from the current NCAA RPI's defects because they are included in the candidate group for at large selections.
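The green/red classification amounts to a pair of set differences between the two Top 57 groups. The following Python sketch illustrates the idea; the function name `candidate_group_changes` and the four-team example with a cutoff of 3 are hypothetical, not the actual rankings or the method used to build the table.

```python
def candidate_group_changes(ncaa_rank, balanced_rank, cutoff=57):
    """Return (green, red) sets of team names.

    green: in the Balanced RPI Top `cutoff` but not the current NCAA RPI Top `cutoff`
    red:   in the current NCAA RPI Top `cutoff` but not the Balanced RPI Top `cutoff`
    """
    ncaa_top = {team for team, rank in ncaa_rank.items() if rank <= cutoff}
    balanced_top = {team for team, rank in balanced_rank.items() if rank <= cutoff}
    green = balanced_top - ncaa_top  # hurt: kept out of the candidate group
    red = ncaa_top - balanced_top    # helped: let into the candidate group
    return green, red


# Hypothetical four-team example, using a cutoff of 3 instead of 57.
ncaa = {"Team A": 1, "Team B": 2, "Team C": 3, "Team D": 4}
balanced = {"Team A": 1, "Team D": 2, "Team B": 3, "Team C": 4}
green, red = candidate_group_changes(ncaa, balanced, cutoff=3)
print("green:", sorted(green))  # green: ['Team D']
print("red:", sorted(red))      # red: ['Team C']
```

Because each Top 57 group contains exactly 57 teams (assuming no tied ranks), the green and red sets always have the same size, which is consistent with both groups in the table having eight teams.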

There is a clear difference in the nature of the two groups. The group that gets hurt by the current NCAA RPI consists of Power 5 teams, with one exception. The group that correspondingly benefits consists of non-Power 5 teams, with one exception. This is exactly as expected, since the current NCAA RPI discriminates against stronger conferences and in favor of weaker ones.

For these two groups, the three right-hand columns show important information. The third column from the right shows the average current NCAA RPI rank of the team's opponents. The second column from the right shows the average rank of those same opponents as strength of schedule contributors under the current NCAA RPI. The far right column shows the difference between these two numbers. Take Virginia as an example: its opponents' average current NCAA RPI rank is 130, but their average rank as strength of schedule contributors is only 175. Thus, according to the RPI itself, the formula's strength of schedule calculation understates the strength of Virginia's opponents by 45 positions. Contrast this with Lamar, from the red group. Lamar's opponents' average current NCAA RPI rank is 225, but their average rank as strength of schedule contributors is 199, so according to the RPI itself, the formula overstates the strength of Lamar's opponents by 26 positions. Looking at the red and green groups as a whole, the pattern is clear: the current NCAA RPI's strength of schedule problem is putting teams (red) that should not be in the at large candidate group into the group and keeping teams (green) that should be in the group out of it.
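The far right column is simple arithmetic on the two columns before it. A minimal Python sketch follows, using the Virginia and Lamar figures above. The function names are hypothetical, and the sign convention (strength of schedule contribution rank minus RPI rank, so a positive gap means understatement) is an assumption; the table itself may use the opposite sign.

```python
def sos_gap(avg_opp_rpi_rank, avg_opp_sos_rank):
    """Opponents' average SoS contribution rank minus their average RPI rank.

    Sign convention (an assumption for this sketch):
    positive -> the SoS calculation understates the opponents' strength
    negative -> the SoS calculation overstates the opponents' strength
    """
    return avg_opp_sos_rank - avg_opp_rpi_rank


def describe(gap):
    """Turn a gap into a plain-English statement."""
    if gap > 0:
        return f"understates opponents' strength by {gap} positions"
    if gap < 0:
        return f"overstates opponents' strength by {-gap} positions"
    return "matches opponents' strength"


# Figures for Virginia (green group) and Lamar (red group) from the table.
print("Virginia:", describe(sos_gap(130, 175)))
# Virginia: understates opponents' strength by 45 positions
print("Lamar:", describe(sos_gap(225, 199)))
# Lamar: overstates opponents' strength by 26 positions
```

A higher rank number means a weaker team, so when opponents' strength of schedule contribution rank is numerically higher than their RPI rank, the formula is crediting them as weaker than the RPI itself rates them.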

Selections for At Large Positions

The second critical groups are the teams marked C and D in the Category column. The C teams did not get at large positions this year but likely would have if the Committee had used the Balanced RPI: Virginia, Wake Forest, Northwestern, TCU, Washington, and UCF. These are the teams that got hurt the most by the current NCAA RPI's defects. Of these, TCU and UCF are in the current NCAA RPI's Top 57, but the other four are not. The D teams did get at large positions but likely would not have if the Committee had used the Balanced RPI: Arizona State, Providence, Tennessee, Texas A&M, James Madison, and LSU.

For these second groups, the issue is not necessarily Power 5 versus non-Power 5 conferences. Rather, the current NCAA RPI's defects kept deserving teams from even getting realistic consideration for at large positions, both by underrating those teams and by overrating other teams that then occupied the limited positions in the candidate group.

Bottom Line

The bottom line this year is that:

Arizona State, Providence, Tennessee, Texas A&M, James Madison, and LSU benefited from the current NCAA RPI's defects; and

Virginia, Wake Forest, Northwestern, TCU, Washington, and UCF got hurt by the defects.