In my first two 2025 articles, I showed the NCAA RPI's defects and how they cause the RPI to discriminate against teams from some conferences and regions and in favor of teams from others. In this post, I discuss how determinative the NCAA RPI is in the NCAA Tournament at large participant selection process.

Suppose the NCAA were to award at large NCAA Tournament positions to teams based strictly on their NCAA RPI ranks. How much difference would it make from having the Women's Soccer Committee make the at large selections? In other words, how many changes would there be from the Committee's awards? Before reading further, as a test of your own sense of the NCAA RPI's importance in the at large selection process, write down what you think the average number of changes would be per year if the NCAA simply made at large selections based on teams' NCAA RPI ranks. Later in this article, you'll be able to compare your guess to the actual number.

NCAA Tournament At Large Selection Factors

The NCAA requires the Committee to consider certain factors when making its NCAA Tournament at large selections. As those of you who follow my work know, I have converted those factors into a series of individual factors and also have paired them to create an additional series of paired factors in which each individual factor has a 50% weight. Altogether, this produces a series of 118 factors. Some of the NCAA's individual factors have numerical scoring systems -- for example, the NCAA RPI and NCAA RPI Ranks -- and some do not -- for example, Head to Head Results. For those factors that do not have NCAA-created scoring systems, I have created scoring systems.

It is possible, by comparing the teams to which the Committee has given at large positions to teams' scores for a factor, to see how close the match-up is between the Committee's at large selections and the factor scores. The following table shows the factors that best match the Committee's at large selections over the 17 years from 2007 through 2024 (excluding Covid-affected 2020):

As you can see, the Committee's at large selections match teams' NCAA RPI ranks 92.6% of the time. The Committee has "overruled" the NCAA RPI 42 times (568-526) over the 17 year data period. The following table shows how the Committee overrules have played out over the years:

As the table shows, over the years, the Committee's selections have differed from the NCAA RPI ranks by from 1 to 4 positions. The average difference has been 2.47 positions per year. (This is the answer to the question at the top of this article.) The median has been 2. A way to think about this is that on average all the Committee's work has resulted in a change of only 2 to 3 teams per year from what the at large selections would have been if the NCAA RPI made the selections. This suggests that no matter what the Committee members may think, the NCAA RPI mostly controls the at large selection process, with the Committee's work making differences only at the fringes.NCAA Tournament At Large Factor Standards

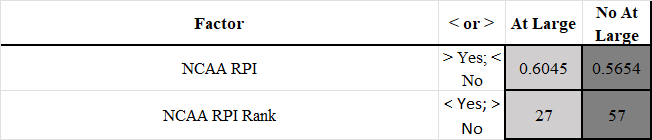

In the table, the At Large column has the "In" standards and the No At Large column the "Out" standards. Thus teams with NCAA RPI ratings better than 0.6045 always have gotten at large selections and teams with ratings poorer than 0.5654 never have gotten selections. Likewise teams with NCAA RPI ranks better than 27 always have gotten at large selections and teams with ranks poorer than 57 never have.

The table includes all NCAA RPI Top 57 teams that were not conference champion Automatic Qualifiers. It is limited to the Top 57 since no team ranked poorer than #57 has ever gotten an at large selection.

NCAA Seed or Selection: This column shows the Committee decision for each team:

1, 2, 3, and 4 are for 1 through 4 seeds

4.5, 4.6, 4.7, and 4.8 are for 5 through 8 seeds

6 is for unseeded teams that got at large selections

7 is for unseeded teams that did not get at large selections

8 is for teams disqualified from at large selection due to winning percentages belows 0.500

Green is for at large selections and red is for not getting at large selections.

NCAA RPI Rank for Formation: This is teams' NCAA RPI ranks.

At Large Status Based on Standards: This column is based on the two grey columns on the right. The first grey column shows the number of at large "In" factor standards a team has met and the second shows the number of at large "Out" standards for the team. In 2024, the Tournament had 34 at large openings. Counting down the "In" and "Out" columns, there were 31 teams that met at least 1 "In" standard and 0 "Out" standards. In the At Large Status Based on Standards column, these teams are marked "In" and color coded green. This means the standards identified 31 teams to get at large selections, leaving 3 additional openings to fill. Counting down further, there were 5 teams that met 0 "In" and 0 "Out" standards. This means those teams could not be definitively ruled 'In" but also could not be definitively ruled "Out." This means those 5 teams should be "Candidates" for the 3 remaining openings. They are marked "Candidate" and color coded yellow. And counting down further are teams that met 0 "In" standards and at least 1 "No" standard. This means the standards identified those teams as not getting at large selections. They are marked "Out" and color coded red.

Supplementing the NCAA Tournament At Large Factor Standards with a Tiebreaker

If you look at the first table in this article, you will see that the NCAA RPI Rank and Top 50 Results Rank paired factor is the best individual indicator of which teams will get at large selections. After applying the factor standards method described above, it is possible to use Candidate teams' scores for this factor as a "tiebreaker" to decide which of those teams should fill any remaining at large openings.

The following table adds use of this tiebreaker to the factor standards method for the 2024 season:

In the table, the "NCAA RPI Rank and Top 50 Results Rank As At Large Tiebreaker" column shows teams' scores for that factor, The lower the score, the better. In the At Large Status Based on Standards and Tiebreaker Combined column, the "In" green cells are for teams that get at large selections based on the Standards plus those teams from the Candidates that get at large selections based on the Tiebreaker. If you compare these to the actual NCAA Seeds or Selections on the left, you will see that the Standards and Tiebreaker Combined at large selections match the actual selections for all but 1 at large position.

In relation to the power of the RPI in directing Committee decisions, it is important to note that the Tiebreaker is based on teams' NCAA RPI Ranks and their ranks based on their Top 50 Results. Teams' Top 50 Results scores come from a scoring system I developed based on my observations of Committee decisions. The scoring system awards points based on good results -- wins and ties -- against opponents ranked in the NCAA RPI Top 50, with the awards depending on the ranks of the opponents and heavily slanted towards good results against very highly ranked opponents. Since the Top 50 Results scores are based on opponents' NCAA RPI ranks, even this part of the Tiebreaker is NCAA RPI dependent.

The following table adds to the preceding tables an At Large Status Based on NCAA RPI Rank column to give an overall picture of how the different "selection methods" compare -- the Committee method, the NCAA RPI rank method, and the Standards and Tiebreaker Combined method.

Summary Data

The following table shows a summary of the data for each year:

In this table, I find the color coded information at the bottom in the High, Low, Average, and Median rows most informative. The information in the green column shows the difference between what the Committee has decided on at large selections over the years as compared to what the decisions would have been if the NCAA simply used the NCAA RPI. The information in the salmon column shows what the difference would have been -- about 1 1/3 positions per year, with a median of 1 -- if the NCAA used a more refined method than the NCAA RPI, but one stilll very heavily influenced by the NCAA RPI.

Altogether, the numbers suggest that the NCAA RPI exerts an almost determinative influence on which teams get NCAA Tournament at large positions. This does not mean the Committee members think that is the case, they may believe that they are able to value other factors as much as or even more than the NCAA RPI. But whatever the individual members think, the numbers suggest that the Committee as a whole is largely under the thumb of the NCAA RPI.

Given the fundamental flaws of the NCAA RPI, as discussed in 2025 Articles 1 and 2, the near-determinative power of the NCAA RPI in the NCAA Tournament at large selection process is particularly disturbing.